Why every security team needs Semgrep Assistant

Semgrep Assistant does what no SAST tool can do on its own: it reasons about the context surrounding an unsafe code construct to:

- Identify and filter out clear false positives

- Generate tailored, step-by-step remediation guidance for developers and security engineers

Spot-checking and validating Assistant's triage and remediation suggestions at scale is easy, and it's orders of magnitude faster than reaching each of these decisions manually, one finding at a time.

Assistant also codifies tribal, organization-specific security knowledge by learning from previous fixes and triage decisions, so teams never waste cycles on an issue that has already been addressed.

TL;DR: Assistant gives back thousands of hours to developers and security engineers, meaning AppSec teams can finally spend their time on the important things that only they can do.

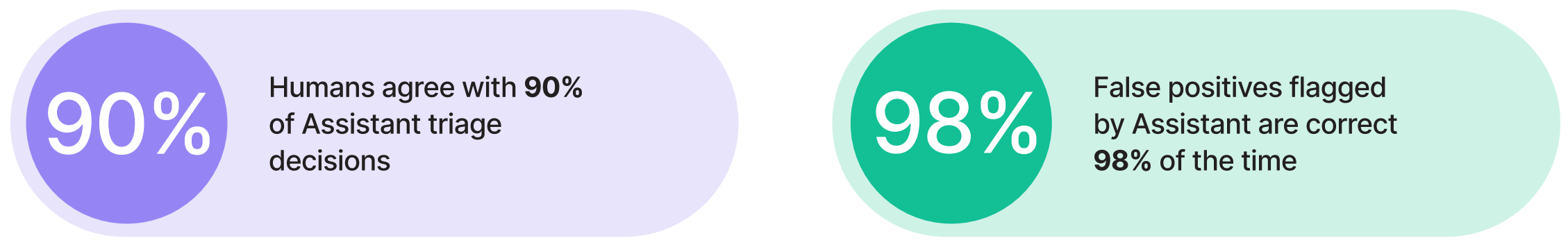

The proof is in the pudding

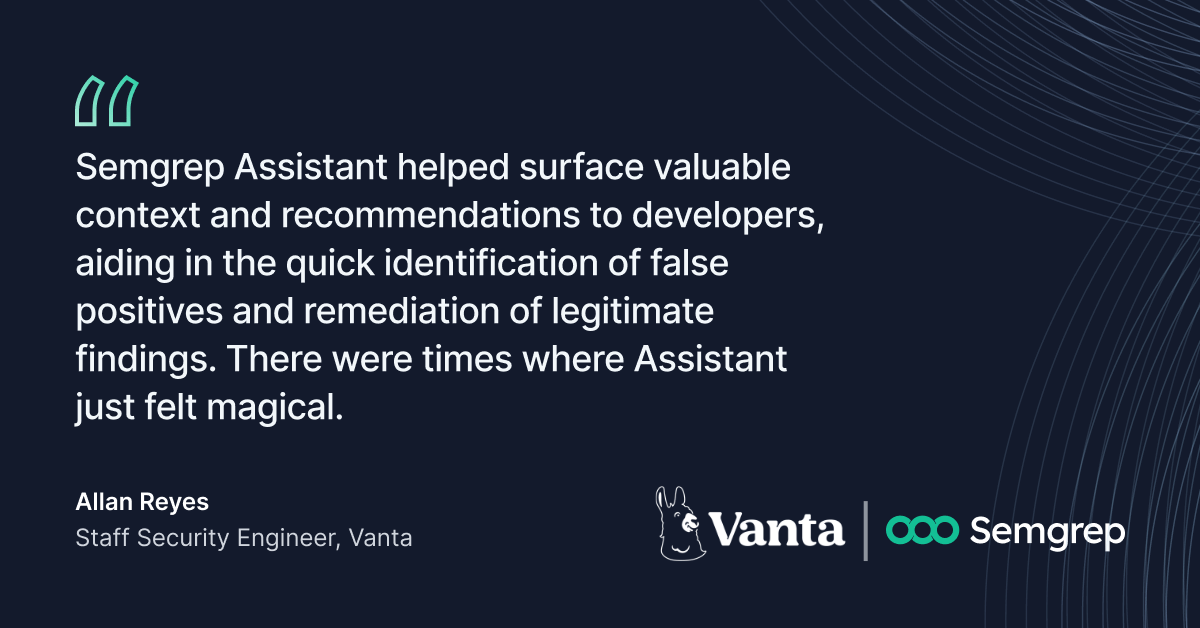

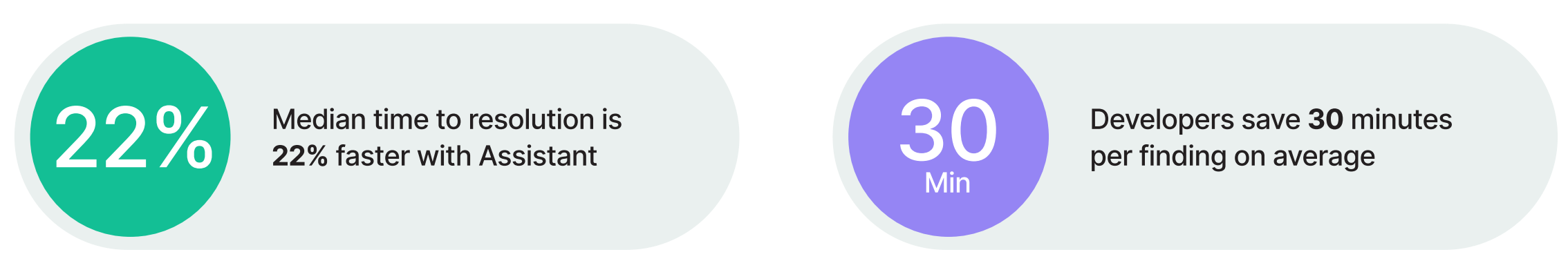

Semgrep Assistant has saved developers and security engineers at companies like Vanta, Figma, and Webflow over 10,000 hours, and that is a conservative estimate!

To learn more about these metrics and the methodology behind them, check out our solution brief for Semgrep Assistant.

What's the secret sauce? (it's not the LLM)

We could talk about this for pages, but to keep it concise:

Assistant's secret sauce is actually Semgrep's deterministic SAST engine and the non-AI bits, which LLMs can easily leverage as a tool to enable the complex, agentic reasoning needed to handle security tasks.

Similar to how ChatGPT uses Python as a tool for math, LLMs can reference the Semgrep engine and rules during their chain of thought to handle complex security tasks.

Remember, Semgrep rules look like source code - meaning LLMs can understand and even write them!
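To make the "rules look like source code" point concrete, here is a minimal sketch in Python. The rule below is a hypothetical example written for illustration (its id and message are made up, and it is not taken from Semgrep's registry or from Assistant itself). It shows that the pattern inside a rule can read exactly like the code it matches: this particular pattern even parses as a valid Python expression. That will not hold for every rule, since patterns that use metavariables such as `$X` are not valid Python.

```python
import ast

# Hypothetical pattern for illustration: match subprocess.call invoked with shell=True.
# The "..." is Semgrep's ellipsis operator; in Python it also happens to be valid syntax.
PATTERN = "subprocess.call(..., shell=True)"

# Hypothetical rule text; the id and message are assumptions made for this sketch.
RULE_YAML = f"""\
rules:
  - id: example-subprocess-shell-true
    languages: [python]
    severity: ERROR
    message: Avoid shell=True; pass the command as a list of arguments.
    pattern: {PATTERN}
"""


def pattern_is_valid_python(pattern: str) -> bool:
    """Return True if the pattern text parses as a Python expression."""
    try:
        ast.parse(pattern, mode="eval")
    except SyntaxError:
        return False
    return True


if __name__ == "__main__":
    print(RULE_YAML)
    print("Pattern parses as Python:", pattern_is_valid_python(PATTERN))
```

Because the pattern is written in the same shape as the target code, a model that can read and write Python has much of what it needs to read or draft a rule like this one.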

Why is this important for enterprises? It means Semgrep's functionality (and by extension the security program of a customer) will never be beholden to a single model provider.

The challenges of implementing AI in an enterprise

While LLMs offer incredible potential, organizations still face several challenges in adopting them, particularly around data privacy and compliance. As AppSec teams look to integrate AI tools into their workflows, they must navigate a range of policies and regulations that vary from one organization to another. Some common questions that arise:

- Which models and vendors are approved for use?

- What data is shared with these models?

- How long is the data retained?

- Is code or data used to train or fine-tune these models?

How Semgrep Assistant accommodates data privacy concerns

One of the most pressing concerns is whether sensitive data - such as source code - is used to train AI models. Semgrep takes data privacy seriously and has designed Assistant from the ground up with this in mind:

Your data is never used to train models. Semgrep’s agreements with providers like OpenAI and AWS Bedrock ensure that customer code and data are not used to train LLMs.

Zero data retention at OpenAI. Semgrep’s agreement with OpenAI ensures that no customer data is retained on their servers beyond the time needed to return a response.

Minimal data retention for enterprise customers. For enterprise users, Semgrep offers a minimal data retention policy to further limit how much data is stored. With this policy, customer data, including code and prompts sent to Semgrep Assistant, is not stored in external systems like Amazon S3 or any logging tools.

Flexible model selection

Another standout feature of Semgrep Assistant is flexibility when it comes to model selection. Enterprise customers can choose from a variety of options to meet their unique needs and compliance requirements:

- Bring your own API key for OpenAI or Azure OpenAI.

- Use AWS Bedrock to access models from AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon itself.

This robust model selection enables organizations to maintain control over which LLMs they use, ensuring compliance with internal policies while always benefiting from the best models available.

Read our Assistant privacy overview!

Semgrep Assistant is not just a game-changing solution for enterprises; it's a compliant one.

We’ve summarized our approach to LLMs and data privacy in a document that you can easily forward to legal or procurement teams who want to learn more about how Semgrep Assistant addresses the standard concerns.